Cloud data warehouses: SCD Type 2 connected directly to the fact table

A dream star schema. Historical data is connected directly to the fact table

I've been working through the Microsoft DP-500 training: Exam DP-500: Designing and Implementing Enterprise-Scale Analytics Solutions Using Microsoft Azure and Power BI. Having recently gone through a Devabout-dp-50Ops training for work, it only makes sense that I would want to broaden my knowledge of cloud tools.

Skip ahead to read more about the DP-500 training

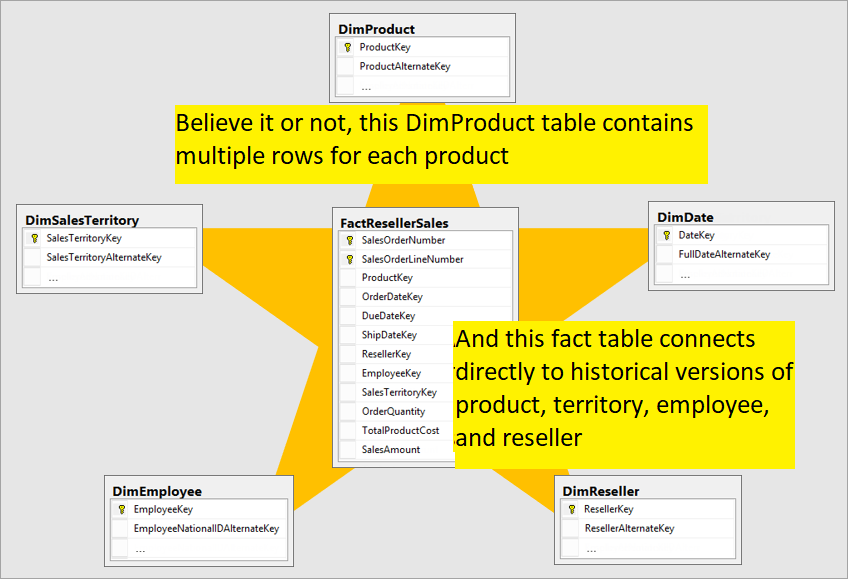

In the section Design a data warehouse schema, I was surprised (and delighted) to see that Slowly Changing Dimension (SCD) Type 2 is presumed as the format for dimensions— and that these historical dimensions were connected directly to the fact tables. Up to this point, most data warehouses I have run into have used SCD Type 1 as the default, and then required analysts to do gymnastics to connect to a historical dimension. According to Wikipedia, this would be Type 2 / type 6 fact implementation. I notice in this training that Microsoft does not use the term SCD at all, and their sample dimension only has the surrogate key and alternate key without a current flag, start date, or end date.

I don’t want to get bogged down in all the complexities. To put it simply, in many traditional data warehouses, the data warehouse connects using a surrogate key for the most current version of a dimension. There may also be a historical dimension, but to connect the fact table to that dimension requires looking at the value which is between the start and end date of a particular row in the dimension. OOF! Instead, Microsoft Learn presents as best practice of tracking all historical changes within the dimension, so that the related data will represent the state at the time of the transaction. This is so much simpler! As the Wikipedia article on Type 2 with fact implementation explains it: “the surrogate key from the dimension is put into the fact table in place of the natural key when the fact data is loaded.”

With this design in place, it is easy to report on sales by sales team even when salespeople move between teams. To report on sales by sales person, you only need to use the alternative key, most likely a business key like an employee number, instead.

The training page spells out the benefit in this way:

“Attributes of entities may change over time - for example, a customer might change their address. Since the data warehouse is used to support historic reporting, you may want to retain a record for each instance of an entity at multiple points in time; so that, for example, sales orders for a specific customer are counted for the city where they lived at the time the order was placed. In this case, multiple customer records would have the same business key associated with the customer, but different surrogate keys for each discrete address where the customer lived at various times.”

This is a data warehouse designed with reporting as the goal. It makes reporting on events by attribute at the time simple. While it was easier and cheaper to create dimensions with only the current state, cloud implementations are improving the usability of data warehouses.

SCD Type 2 in Power BI training

I see that in the star schema training of Power BI that this implementation of SCD Type 2 is recommended as well. It does not discuss the case of using a dimension table which only has the current version and then trying to connect to a second historical dimension even though this is a common pattern with traditional data warehouses. A while back, I wrote blog post suggesting one way of fixing the fact table in this situation.

In Power BI training, SCD Type 2 is shown as being implemented with multiple rows for each Employee and connected directly to the fact table. Be advised: that while this is ideal, a pattern with traditional data warehouses is that the fact table does not connect directly to historical dimensions.

*

A few words about the DP-500 training

The DP-500 feels like it's designed to make analysts who are Power BI specialists more "T-Shaped" by complementing their knowledge of Power BI with a broad knowledge of Microsoft cloud environments:

Microsoft Purview for data governance

Azure Synapse Analytics, including:

Types of analytics: descriptive, diagnostic, predictive, prescriptive

creating data pipelines

Cloud Shell PowerShell

data lakes with structured, semi-structured, and unstructured data and patterns of ingest-tranform-load (ELT) with serverless SQL pools

Azure Synapse Link to synchronize between Azure Cosmos DB, Azure SQL Database, SQL Server, Microsoft Power Platform Dataverse, and analytical data storage queried in Azure Synapse Analytics

Azure Machine Learning

Azure Data Explorer including Kusto Query Language (KQL)

Analyzing data with Apache Spark (distributed data processing)

configuring (serverless) Spark pools

running Spark code in notebooks:

SQL, Python, Scala, Java, SQL, C#

markdown

graphics packages like Matplotlib and seaborn libraries

using Spark dataframes

working with data in various formats including csv, Parquet, Avro, and others

Analyzing data in a relational data warehouse (Synapse dedicated SQL pool):

using SQL functions of aggregation, RANK, ROW_NUMBER, PARTITION, DENSE_RANK, and NTILE for quartile placement.

using approximate counts with distributed servers

The learning path for the module also includes 2 modules on Power BI